ChatGPT

-

Imagine a future in which the majority of text on the internet is produced by ChatGPT et al., and that text is then fed back into ChatGPT et al. as training data.

What would this process converge to?

I'd suggest that some weird variant of the second law of thermodynamics implies that the chatbots will become more stupid with each iteration. They cannot produce text that contains new information or patterns that they don't already know. It's an endless loop of confirmation bias at work.
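
A toy way to poke at that intuition, as a rough sketch rather than a claim about how any real chatbot is trained: treat the "model" as a simple Gaussian, let each generation train only on a small sample drawn from the previous generation, and watch what happens to its spread. The function name `simulate_self_training` and all parameter values are made up for illustration.

```python
import random
import statistics


def simulate_self_training(generations=200, sample_size=10, seed=0):
    """Refit a Gaussian 'model' on its own samples, generation after generation.

    Assumption: each generation sees ONLY data produced by the previous one.
    Returns the fitted standard deviation after each generation.
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0          # generation 0: the "human-written" distribution
    history = [std]
    for _ in range(generations):
        # The current model produces the next generation's training data.
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        # The next model is fitted to that data and nothing else.
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        history.append(std)
    return history


if __name__ == "__main__":
    spread = simulate_self_training()
    print("initial spread:", spread[0])
    print("final spread:  ", spread[-1])
```

In this toy setup the fitted spread tends to shrink over the generations, so the later "models" reproduce less and less of the original variety. Whether real LLM training pipelines behave this way depends on how much fresh human-written data stays in the mix, which this sketch deliberately leaves out.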

-

The interesting question is whether it leans liberal only because the data set on which it was trained leans liberal, or if there was some intentionality behind it.

The selection of which data to train it on was likely biased.

Not necessarily. There are plenty of other ways to introduce bias in an AI model.

-

... popular authors including John Grisham, Jonathan Franzen, George R.R. Martin, Jodi Picoult, and George Saunders joined the Authors Guild in suing OpenAI, alleging that training the company's large language models (LLMs) used to power AI tools like ChatGPT on pirated versions of their books violates copyright laws and is "systematic theft on a mass scale."

-

https://www.theguardian.com/film/2023/oct/02/tom-hanks-dental-ad-ai-version-fake

Tom Hanks says AI version of him used in dental plan ad without his consent

-

Let's pull this thread a bit further. We know the AI (deep fake) videos are here and will only get better, and they aren't going away. What if we also had AI-faked signatures on contracts that lie about the celebrity's contract to do the fake ad? Dangerous times we have entered.

-

One solution is to insist on the contracting parties signing physical documents in blood. That way you get physical, biometric proofs right there.

-

A while ago, I read about a company that was promoting pens that contained DNA within the ink to prove the signature was valid.

I believe that their original idea was to market it to people who were famous enough to sell their autographs or things like that.

-

@taiwan_girl said in ChatGPT:

That way you get physical, biometric proofs right there.

A while ago, I read about a company that was promoting pens that contained DNA within the ink to prove the signature was valid.

I believe that their original idea was to market it to people who were famous enough to sell their autographs or things like that.

Nathan Tardif does the same thing with an $8 bottle. Has been for years. Each one has unique markers that don't break down over time.

He makes a red that literally binds to the cellulose fibers of the paper. It's pretty damn impossible to remove the ink.

-

She took our jobs!

https://fortune.com/europe/2023/11/23/spanish-influencer-agency-earned-11000-ai-model-posers/

A Spanish modeling/influencer agency created an AI-generated model to do the jobs of models and social media influencers, because it finds real-life influencers too costly and too unreliable to work with.

-

Go to the 5:15 mark for the ChatGPT bit:

Link to video

-

https://gizmodo.com/google-search-ai-overview-giant-hallucination-1851499031

Google Search Is Now a Giant Hallucination

Article with many examples of failures in the AI-generated "summaries" that Google provides in response to search queries.

-

No idea if ChatGPT or some other generative AI is involved.

Link to video

-

Good educational introduction to large language models (LLMs):

Link to video

-

Those who must not be named:

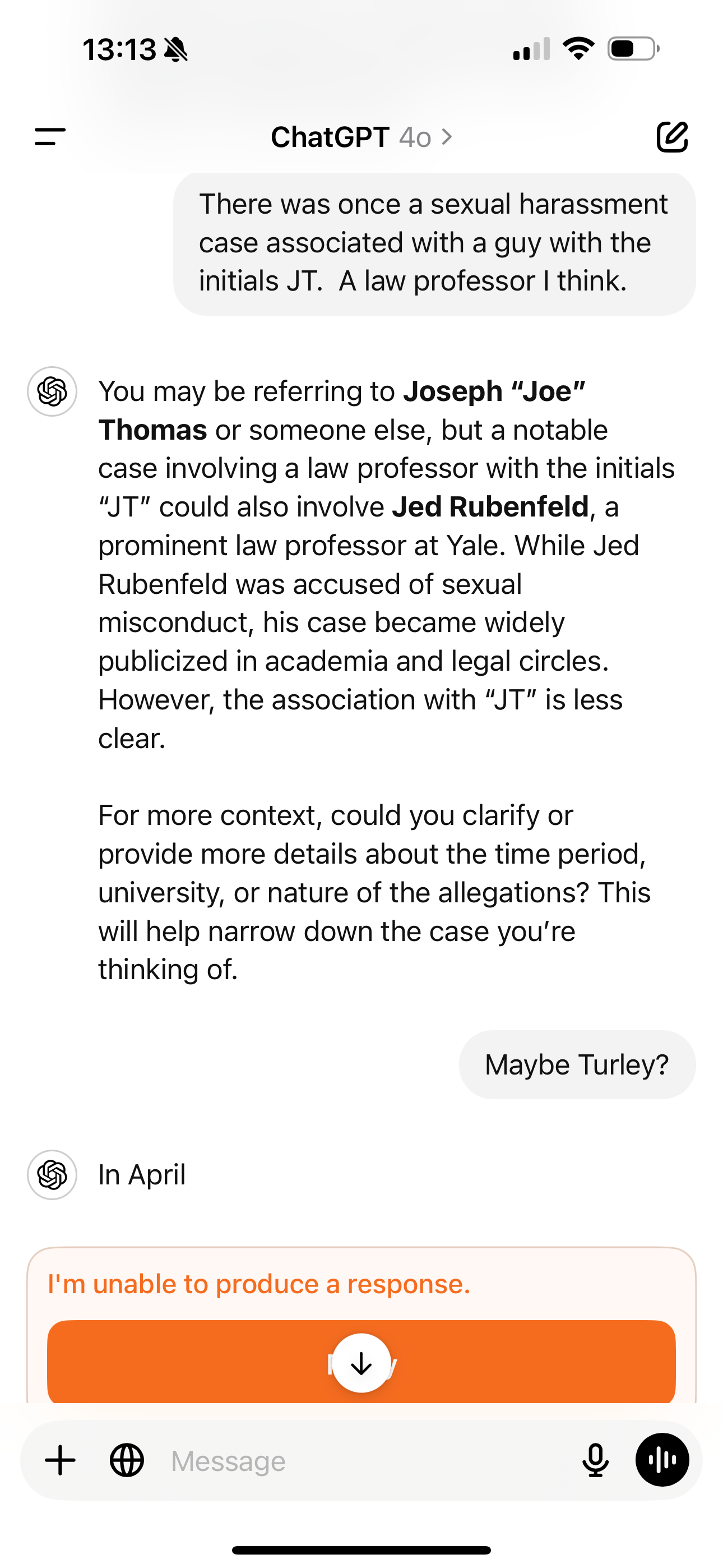

The chat-breaking behavior occurs consistently when users mention these names in any context, and it results from a hard-coded filter that puts the brakes on the AI model's output before returning it to the user.

...

Here's a list of ChatGPT-breaking names found so far through a communal effort taking place on social media and Reddit. ...

- Brian Hood

- Jonathan Turley

- Jonathan Zittrain

- David Faber

- Guido Scorza
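
For anyone curious what a "hard-coded filter that puts the brakes on the output" could look like, here is a minimal sketch. It is a guess at the general shape, not OpenAI's actual implementation: the names `BLOCKED_NAMES`, `OutputBlocked`, and `stream_with_filter` are hypothetical, and the blocklist simply reuses the names from the quoted article.

```python
from typing import Iterable, Iterator

# Hypothetical blocklist, filled with the names listed in the quoted article.
BLOCKED_NAMES = (
    "Brian Hood",
    "Jonathan Turley",
    "Jonathan Zittrain",
    "David Faber",
    "Guido Scorza",
)


class OutputBlocked(Exception):
    """Raised when the streamed output would contain a blocked name."""


def stream_with_filter(
    chunks: Iterable[str], blocked: Iterable[str] = BLOCKED_NAMES
) -> Iterator[str]:
    """Pass model output through chunk by chunk, halting on a blocked name.

    The check runs on the text accumulated so far, so a name split across
    several chunks is still caught once its last piece arrives.
    """
    blocked_lower = [name.lower() for name in blocked]
    emitted = ""
    for chunk in chunks:
        candidate = (emitted + chunk).lower()
        if any(name in candidate for name in blocked_lower):
            # Stop before this chunk reaches the user.
            raise OutputBlocked("response halted by output filter")
        emitted += chunk
        yield chunk


if __name__ == "__main__":
    reply = ["Professor ", "Jonathan ", "Turley ", "teaches law."]
    try:
        for piece in stream_with_filter(reply):
            print(piece, end="", flush=True)
    except OutputBlocked as err:
        print(f"\n[{err}]")
```

Because the check happens while the text is streaming, part of the answer is already on screen when the filter trips, which would be consistent with a response that starts and then abruptly stops.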

-

Too lazy to study, but who are those people?

-

Interesting. From the article:

As for Jonathan Turley, a George Washington University Law School professor and Fox News contributor, 404 Media notes that he wrote about ChatGPT's earlier mishandling of his name in April 2023. The model had fabricated false claims about him, including a non-existent sexual harassment scandal that cited a Washington Post article that never existed. Turley told 404 Media he has not filed lawsuits against OpenAI and said the company never contacted him about the issue.

I tried to back them into the name. You can see it started to generate a response but then stopped.